Abstract.

Offering a hypothetical synthesis between contemporary evolutionary theories and the main Chomskian paradigm, and starting from a comparison between a Code Model of Language (built around an integration of Fodor’s LOT view) and his own original perspective on human language, Ferretti (2007) introduces a number of theoretical concepts. In this paper I offer a critique of those solutions, highlighting some uncomfortable consequences of his theoretical assumptions (with special regard to the notion of “intelligence”), and in the end I sketch a proposal of my own.

1. Introduction

Human Language is not entirely socially driven and conventional; rather, its basis is structured around a strictly generative process that starts from a given set of principles and parameters. With this simple[G1] idea in mind, Noam Chomsky (1959) swept away most of the ambitions of the behaviorist approach[1], changing the rules not only of research programs in linguistics but also giving an important impetus to cognitive science in its infancy.

From Language, then, we come to the Mind. The Computational Representational Theory of Mind (CRTM), advocated by Jerry Fodor, draws a model of the Mind built around the notion of a Language of Thought (LOT)[G2]. Just like the predetermined generative Language described by Chomsky, the LOT is in some form preconfigured inside the mind/brain and held together by computational relations coded in symbolic form.

If we grant self-explanatory value to the bare computational component[2], which Fodor and Chomsky in some way share, taking the Chomsky-Fodor alliance[3] for granted gives us a far better understanding of the major problems of what Ferretti (2007) calls the “Code Model Theory” of language. We can summarize the main points of this solution in seven steps:

i. Human Language is a complex generative process totally ruled by structured internal principles;

ii. A Language Of Thought, formed by a lexicon of symbols and a computational rule set, grounds the representational mind;

iii. Language structure and Thought structure are isomorphic[G3] ;

iv. Language is an output of the mind by means of the LOT;

v. The Human Mind is structured on a modular basis, with a central holistic engine taken as main computational core;

vi. Production, recognition and acquisition of language are complex functions of an autonomous, specialized and itself complex module of the mind[G4];

vii. Language is an output of a special linguistic module of the mind driven by the LOT.

Moreover, adding to this structure a few elements from the Shannon-Weaver (1949) mathematical theory of information[4] gives us a fairly satisfying definition of this approach to language and mind:

- Language is a coded output, made for the communication of thoughts, of a special linguistic module of the mind driven by the LOT, ready to be decoded by a listener endowed with the linguistic faculty[G5].
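The definition above can be made concrete with a toy version of the Shannon-Weaver pipeline described in note 4. This is only an illustration under stated assumptions: the four-word vocabulary and the fixed-length binary code are hypothetical, not part of Ferretti’s or Fodor’s account. What it shows is the property the Code Model relies on: the listener recovers the message only because speaker and listener share the same codebook, and nothing outside the code contributes to the result.

```python
from math import ceil, log2

# Hypothetical shared vocabulary: the "thoughts" a speaker can express.
VOCABULARY = ["rain", "food", "danger", "friend"]


def build_codebook(symbols):
    """Assign each symbol a fixed-length binary codeword."""
    width = max(1, ceil(log2(len(symbols))))
    return {s: format(i, f"0{width}b") for i, s in enumerate(symbols)}


def encode(message, codebook):
    """Speaker side: map each symbol to its codeword and concatenate the bits."""
    return "".join(codebook[s] for s in message)


def channel(bits):
    """Noiseless channel: the bits arrive unchanged."""
    return bits


def decode(bits, codebook):
    """Listener side: split the bits into fixed-width codewords and invert the codebook."""
    width = len(next(iter(codebook.values())))
    inverse = {code: s for s, code in codebook.items()}
    return [inverse[bits[i:i + width]] for i in range(0, len(bits), width)]


codebook = build_codebook(VOCABULARY)
sent = ["danger", "rain"]
received = decode(channel(encode(sent, codebook)), codebook)
print(encode(sent, codebook))  # 1000
print(received)                # ['danger', 'rain']
```

A listener holding a different codebook would decode the same bits into different words, or fail outright, which is the sense in which meaning here is fixed entirely by the shared code.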

Given those assumptions, and accepting definition 1, there are some direct consequences, stressed by Ferretti, that need to be considered in order to evaluate the Code Model proposal:

i. Taking for granted an autonomous set of primitive principles and rules may lead toward a theory that makes human beings special with respect to the rest of nature. Can such a theory be reconciled with contemporary evolutionism[G6]?

ii. The Code Model describes language as subordinate to Mind, and in particular to the LOT. Is this kind of isomorphism parasitic, or a valid resource for our purposes[G7]?

iii. The Code Model advocates a syntax-centric notion of “literal meaning” (Katz & Fodor 1963), in which only the “direct” content of a linguistic expression, conveyed as information, determines how it is interpreted. On this account, meaning is completely context-blind and comes out of a strictly mechanical coding/decoding function.

Constraints of length and time prevent me from covering the entire scope of his work, so I concentrate on the aspects I consider most significant and hence most problematic. In the next two sections I consider each of Ferretti’s arguments listed above, presenting his criticisms and offering a short personal answer to them. In closing, I sketch some personal ideas on the topics discussed, drawing on my previous studies in Computer Science and Philosophy of Information.

2. Evolutionism Encoded

Chomsky, who has defined Generative Linguistics since Syntactic Structures (1957), explicitly rejects some of the assumptions and consequences popularized by Darwinian evolutionism. Arguments such as the “Poverty of the Stimulus” and the “Structure Principle”[5] provide serious reasons to believe that evolutionary thinking has its limits, and that human language may be one of them. Ongoing experiments and empirical evidence, such as creolization processes[6] and studies in language acquisition[7], provide more and more reasons to consider the Chomskian arguments quite solid. Generative Linguistics thus raises some striking issues on its own, so I will focus on it for the moment, leaving the integration of the LOT for later analysis.

Ferretti, a linguist himself, proposes to close the gap between Chomsky’s theoretical account and evolutionary theories by making three kinds of assumptions:

i. Language is a complex by-product of a larger evolutionary process based on an “adaptive equilibrium” (equilibrio adattivo) heuristic. Complexity arises only from a process of evolution driven by environmental pressure (Bloom 1998);

ii. Adaptive equilibrium is itself grounded on the notion of “cognitive struggle” (sforzo cognitivo), which qualifies every adaptation to the environment as the result of an act of “intelligence” (intelligenza) that overcomes environmental pressure[G8];

iii. Intelligence is a function that maximizes “pertinence” (pertinenza): the equilibrium between the resources involved and their effect[G9]. Maximizing pertinence means optimizing information processing. The highest pertinence is always a sign of intelligence and leads to the most ingenious adaptation.
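The text reports no formula for pertinence, so any formalization is an assumption. Purely to make the “equilibrium between the resources involved and their effect” tangible, the sketch below scores each candidate response by the ratio of its cognitive effect to the resources it consumes and picks the highest-scoring one. The candidate names and numbers are hypothetical, and the ratio is only one possible reading; the point is that even this toy version must presuppose a numeric measure of “effect”, which is exactly what the questions raised later in this section ask about.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    effect: float  # assumed numeric "positive cognitive effect"
    cost: float    # assumed resources consumed (must be > 0)


def pertinence(c: Candidate) -> float:
    """Hypothetical pertinence score: effect obtained per unit of resource spent."""
    if c.cost <= 0:
        raise ValueError("cost must be positive")
    return c.effect / c.cost


def most_pertinent(candidates):
    """'Intelligence' in this toy reading: pick the candidate with maximal pertinence."""
    return max(candidates, key=pertinence)


options = [
    Candidate("flee", effect=8.0, cost=4.0),   # pertinence 2.0
    Candidate("hide", effect=6.0, cost=2.0),   # pertinence 3.0
    Candidate("fight", effect=9.0, cost=9.0),  # pertinence 1.0
]
print(most_pertinent(options).name)  # hide
```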

“Adaptive equilibrium” is thus a clear attempt to overcome the Chomskian repudiation of evolution by adaptation, introducing a fundamental notion that does so by getting past the structure dependence principle. Indeed, if Language has been built up by an evolutionary process, then we have to admit that grammatical structure is radically non-fixed and that the environment plays a role in the evolution of the whole linguistic faculty. This means assuming a new incremental dimension for syntactic structure, perhaps positing an intermediate step of complexity between primitive and contemporary grammar (Jackendoff 1993), and new modalities for semantics too[8].

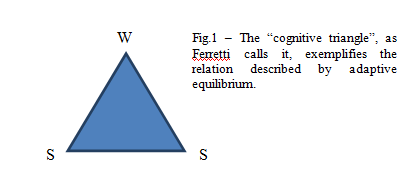

Ferretti chooses to place the evolutionary subject inside an extended context that presupposes a triadic relation: he advocates an ecological contribution to the construction of the linguistic faculty that involves not only a relation between the speaker and the world but also a relation between speakers, thereby introducing a social element.

Leaving aside the social component of this model, about which I have little to say beyond noting that it distinguishes a social from an ecological context, what is important to remark is that the particular outcomes of this kind of evolutionary analysis are mediated exclusively by the rather powerful notion of “intelligence”[9]. If language results from an adaptation that stems from our capacity to “intelligently” allocate our cognitive resources, given complexes of ecological stimuli organized by means of the “cognitive triangle”, then there is no difference in kind between us and the other biological beings that populate our planet. Language is nothing more than the result of a particular “cognitive struggle”, evolved through successive adaptations over generations of proto-humans and fully fledged human beings. This long evolutionary process culminated in our modern languages, shaping universal grammar (UG) as it is now[G10][G11].

What is evident in Ferretti’s account is its continuity with Generative Grammar as it stands, even while it distances itself from the programmatic assumptions supported especially by Chomsky (Chomsky 2005). What Ferretti retains from Generative Grammar is its foundation on a set of primitives and principles that contribute to the strongly recursive character of language production. Ferretti tries to avoid simply positing those primitives and principles by grounding them in an evolutionary process able to develop them, but all he really does is move the problem one step further back. Given that intelligence is what drives adaptation, the notion that really needs a clear and deep explanation is “pertinence”, which links the organism to its adaptation.

If pertinence is “an effect of the processes that maximize the effectiveness of information processing”[10], leading to a “positive cognitive effect”[11] balanced against resource consumption, then it is fair to ask two questions:

I. What’s a positive cognitive effect?

II. How is pertinence built up?

We might identify a positive cognitive effect with part of an adaptation, or with an adaptation itself, but then what drives this adaptation would be “intelligence”, by means of adaptive equilibrium. Note that if we asked the same question about “intelligence”, the answer would be that intelligence is driven by pertinence maximization over stimuli collected by means of the cognitive triangle, which starts a serious theoretical loop.
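The loop can be made explicit by writing down, for each notion, the notion it is explained in terms of, and then searching for a cycle. The edges below are my own reading of the passages discussed here (positive cognitive effect, adaptation, intelligence, pertinence, and back); they are an interpretive assumption, not a structure Ferretti states. An ordinary depth-first search is enough to expose the cycle.

```python
# Hypothetical "is explained in terms of" graph, reconstructed from the text.
EXPLAINED_BY = {
    "positive cognitive effect": ["adaptation"],
    "adaptation": ["intelligence"],
    "intelligence": ["pertinence"],
    "pertinence": ["positive cognitive effect"],
}


def find_cycle(graph):
    """Return one definitional cycle as a list of notions, or None if there is none."""
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    color = {node: WHITE for node in graph}
    path = []

    def visit(node):
        color[node] = GREY
        path.append(node)
        for nxt in graph.get(node, []):
            if color.get(nxt, WHITE) == GREY:
                # Back edge: the cycle runs from nxt to the current node.
                return path[path.index(nxt):] + [nxt]
            if color.get(nxt, WHITE) == WHITE:
                found = visit(nxt)
                if found:
                    return found
        path.pop()
        color[node] = BLACK
        return None

    for start in graph:
        if color[start] == WHITE:
            cycle = visit(start)
            if cycle:
                return cycle
    return None


print(" -> ".join(find_cycle(EXPLAINED_BY)))
```

Breaking the loop would require at least one notion with no outgoing edge, that is, a primitive defined independently; this is the demand the argument here is pressing.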

Answering the second question raises further issues. Pertinence is itself a function that makes use of information, so even if we do not adopt a quantitative theory of information tied to coding theory, for information to count as information there must be some kind of interpretation principle, even the simplest one, that allows a subject, even a single eukaryotic cell, to discriminate between stimuli. This interpretation principle must necessarily be in place before the stimulus, otherwise the stimulus would not be a stimulus; so here we have another primitive to account for and no definitive answer to give[12]. Moreover, according to Ferretti, pertinence is the basic ingredient of intelligence and vice versa[G12], so it is unclear which comes first, and whether intelligence as such can be translated down to a really low level of explanation, reaching the basic biological structures driven by simple rules that Dennett calls “blind” (Dennett 1995)[G13].

Given this last point too, as I said before, Ferretti only keeps postponing the solution, exposing his theory to some of the hardest problems an evolutionary theory has to face[13] and offering an account with no clear foundation. As things stand, the solution made popular by Chomsky simply appears more elegant while retaining the same productiveness.

3. Integrating the LOT

Considering now the Code Model in its complete form, the integration of UG and Generative Linguistics with the LOT view illustrated above raises new issues that extend the range of problems to a more general theory of mind. The Code Model treats Mind and Language as basically isomorphic, but it also grounds a substantial hierarchy between the two[G14]. The relation between Language and Mind becomes largely parasitic, with only the Mind, characterized by the LOT, retaining real power over the determination and production of Language.

This primacy of the LOT-driven Mind over Language is what really troubles Ferretti. Given his evolutionary framework, illustrated above, what he needs are three new ways of understanding the major components of the Mind/Language relation:

i. Language follows a modular organization and is itself a module of the Mind, but we need a notion of module that allows for adaptive equilibrium;

ii. The Mind is strictly representational[G15], but we have to find a way to define a richer notion of representation that accounts for the entire environmental context;

iii. The meaning of a linguistic expression has to be determined by more than the logical and syntactic form of individual sentences and utterances. Even if we consider both, we do not find the source of semantics: from a logical point of view, the evaluation of truth is not enough for speaking about the world; for the same reason, the syntax of a language is the basis on which we can use the language, but it does not speak about states of affairs, because it is a necessary but not a sufficient condition for doing so. Notice that we can utter many true propositions and still say nothing about the world. The environment has to contribute meaningfully to the grounding and interpretation of linguistic expressions.

Modularity itself is not the problem; rather, the theoretical implications advocated by Chomsky for language[14] and by Fodor for the theory of mind make it hostile to evolutionary explanations. A module is described as a fixed, high-speed, autonomous device configured to perform a specialized task and deliver one type of information in the form of a very simple output[15] for the benefit of a central computational engine.

As it stands, modularity simply imposes too many constraints to be restated in evolutionary form, effectively encapsulating mental structures from the environment and not only from one another. The solution may be to limit informational encapsulation: to admit that the integration of different kinds of information, clearly visible in phenomena such as musical performance or “dead reckoning” (Gallistel 1990, Landau 2002), is possible, and then to extend modularity beyond the peripheral retrieval and analysis of information.

Taking this alternative leads to Ferretti’s choice, which transforms a “dumb” module into a rather “intelligent” one. Modularity, as Ferretti takes it, involves a large portion of the mind, configuring it as a set of competing structures mediated by an administration principle that selects the most “pertinent” one given the available resources and selective pressures[G16]. The task performed by each module is no longer strictly elementary; it simply involves whatever came to be useful to the organism during phylogenesis. This is what makes the module in some sense “intelligent” and ties it to an evolutionary grounding. Finally, what drives the economy of modules is nothing more than the notion of adaptive intelligence encountered in the previous section.
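Ferretti’s “administration principle” is described only informally. One minimal way to picture it, assuming that each module reports a cost and an expected effect, is an arbiter that discards the modules the current resource budget cannot afford and lets the most pertinent of the rest win. Module names, numbers, and the effect-per-cost score are illustrative assumptions; note that the arbiter still needs a built-in scoring rule, which is where the question of how pertinence is obtained comes back.

```python
from typing import NamedTuple, Optional


class Module(NamedTuple):
    name: str
    effect: float  # assumed expected cognitive effect of the module's output
    cost: float    # assumed resources the module needs (must be > 0)


def arbitrate(modules, budget: float) -> Optional[Module]:
    """Hypothetical administration principle: among affordable modules,
    select the one with the highest effect per unit of cost."""
    affordable = [m for m in modules if m.cost <= budget]
    if not affordable:
        return None
    return max(affordable, key=lambda m: m.effect / m.cost)


modules = [
    Module("face recognition", effect=5.0, cost=1.0),   # ratio 5.0
    Module("dead reckoning", effect=12.0, cost=2.0),    # ratio 6.0
    Module("sentence parsing", effect=20.0, cost=5.0),  # ratio 4.0
]
print(arbitrate(modules, budget=10.0).name)  # dead reckoning
print(arbitrate(modules, budget=1.5).name)   # face recognition
print(arbitrate(modules, budget=0.5))        # None
```

Changing the budget changes the winner without changing any module, which is one way of reading the claim that selective pressures and available resources, not the modules alone, determine behavior.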

But Language is not driven exclusively by the physical world: a theory of its evolution needs to incorporate and account for important social elements that contribute greatly to linguistic experience. Doing so offers the opportunity to upgrade the notion of representation which, together with the modularity of mind and UG, contributes greatly to the Code Model Theory.

Fodor’s view treats representations as “symbolic structures, which typically have semantically evaluable constituents”[16] and mental processes as “rule-governed manipulations of them that are sensitive to their constituent structure”[17]. What is crucial here is that this syntax-centric definition, derived directly from Chomsky, supports a classical notion of literal meaning that leaves things like intentional information out of language interpretation, assigning the role of defining meaning to the “truth value”[18][G17] of each symbol and to the Logical Form (LF) of sentences.
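To see what a purely syntax-driven, context-blind semantics amounts to (compare note 18), here is a toy evaluator: atomic symbols get their truth value from a model of the world, and complex formulas get theirs by rules that look only at constituent structure. The tuple encoding of formulas and the model are hypothetical; the sketch covers only propositional connectives and ignores quantifiers and everything else a real Logical Form would contain.

```python
from typing import Dict, Tuple, Union

# A formula is either an atomic symbol (str) or a tuple (operator, *subformulas).
Formula = Union[str, Tuple]


def evaluate(formula: Formula, world: Dict[str, bool]) -> bool:
    """Compute a truth value using only the formula's constituent structure
    and the truth values the world assigns to atomic symbols."""
    if isinstance(formula, str):
        return world[formula]  # atomic symbol: correspondence to a fact
    op, *args = formula
    if op == "not":
        return not evaluate(args[0], world)
    if op == "and":
        return all(evaluate(a, world) for a in args)
    if op == "or":
        return any(evaluate(a, world) for a in args)
    if op == "implies":
        return (not evaluate(args[0], world)) or evaluate(args[1], world)
    raise ValueError(f"unknown operator: {op}")


# Hypothetical model: which atomic facts hold.
world = {"it_rains": True, "street_wet": True, "sun_shines": False}

lf = ("and", ("implies", "it_rains", "street_wet"), ("not", "sun_shines"))
print(evaluate(lf, world))  # True
```

Nothing about who utters the formula, or why, can affect the result; that is precisely the limitation the following argument targets.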

But every day we have clear evidence that language is rich and strongly socially driven. If we understand this social element as deeply involved in an intentional kind of system, and if language and mind are isomorphic, then we have to conclude that the mind is based on elements richer than the LOT.

What we need now is an upgrade to the representational theory presented so far, or at least some way of allowing evolutionary integration. Ferretti does so by arguing that language is the product of an evolutionary process of cognitive systems that makes the organism aware of the social context in which it is immersed. Language is the product not only of an ecological type of intelligence but also of a social one[19][G18]. The outcome of this process is a meta-representational system that integrates much more information, enabling the cognitive system to read the intentional states of others. Building a meta-representational system may lead, through evolution by natural selection, to a complex theory of mind (TOM). This is nothing more than a device able to efficiently process and anticipate meta-representations, that is, representations of the intentional, hence representational, states of other cognitive beings[20].
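A meta-representation, a representation whose content is itself someone’s representational state, has a naturally recursive shape. The sketch below assumes a simple agent/attitude/content record (the class and field names are hypothetical) and computes the order of a representation as its depth of nesting: attributing a belief to someone, as in the false-belief tasks cited in note 20, already needs order 1, while “Anne thinks that Sally believes…” needs order 2.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Attitude:
    """Hypothetical record for an intentional state: agent, attitude, content."""
    agent: str
    kind: str                        # e.g. "believes", "wants", "pretends"
    content: Union[str, "Attitude"]  # a plain proposition or another intentional state


def order(rep: Union[str, Attitude]) -> int:
    """Order of a (meta-)representation: how many intentional states are nested."""
    if isinstance(rep, str):
        return 0  # plain representation of a state of affairs
    return 1 + order(rep.content)


fact = "the marble is in the basket"
sally = Attitude("Sally", "believes", fact)
anne = Attitude("Anne", "thinks", sally)

print(order(fact), order(sally), order(anne))  # 0 1 2
```

The recursion places no upper bound on nesting, which leaves open the question of what form a meta-representation actually takes in a real cognitive system.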

Reviewing the last notion highlighted by Ferretti lets us sum up the insights gathered so far: the Mind is formed by numerous smart[G19] modules that compete with each other following the principle of adaptive equilibrium; the integration of pertinent information in representational form contributes to a meta-representational system that, by relating social and ecological intelligence, contributes to the evolution of a theory of mind and hence of language as an intentional communication system. Within this framework, Ferretti’s revised notion of linguistic meaning runs as follows:

i. Meaning[21] is introduced by the modules and partially processed there, following phylogenetically derived principles and mechanisms;

ii. Meaning is sensitive to the context of the linguistic expression conveyed by language and hence able to integrate intentional information;

iii. Meaning corresponds to our sensorimotor system, grounding itself in our rule set for interpreting the world, otherwise called concepts (Talmy 1983, 2000; Jackendoff 1983; Landau & Jackendoff 1993; Landau, Smith & Jones 1998; Soja, Carey & Spelke 1992);

iv. Meaning is not the product of a mechanical encoding/decoding function based on pure syntax.
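Points ii and iv can be contrasted with a deliberately simple example. A context-blind decoder maps an utterance to the same output every time, whereas a context-sensitive interpreter also consults the situation of utterance, here only to resolve indexicals such as “I” and “here”. The indexical table, the context fields, and the function names are hypothetical; the example illustrates the distinction only and is not Ferretti’s model of meaning.

```python
from typing import Dict, List

# Hypothetical mapping from indexical words to the context field that fixes them.
INDEXICALS = {"I": "speaker", "here": "place", "now": "time"}


def decode_literal(utterance: str) -> List[str]:
    """Context-blind decoding: the output depends on the words alone."""
    return utterance.split()


def interpret(utterance: str, context: Dict[str, str]) -> List[str]:
    """Context-sensitive interpretation: indexicals are filled in from the situation,
    falling back to the bare word when the context does not supply a value."""
    return [context.get(INDEXICALS[w], w) if w in INDEXICALS else w
            for w in utterance.split()]


u = "I am here"
print(decode_literal(u))                                    # ['I', 'am', 'here']
print(interpret(u, {"speaker": "Anna", "place": "Rome"}))   # ['Anna', 'am', 'Rome']
print(interpret(u, {"speaker": "Marco", "place": "Milan"}))  # ['Marco', 'am', 'Milan']
```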

Accepting this, we can state Ferretti’s main conclusion in a simple definition:

- Language is a product of a modular and meta-representational mind by means of adaptive equilibrium.

Again, what is crucial to the entire analysis in this framework is the notion of intelligence taken as a primitive. Whether social or ecological, its heuristic value is pervasive and involves the full range of theoretical tools used throughout the argument, yet it is never given a precise definition. Its negative effects are certainly much less pronounced than the circularity found in the preceding section, but its formal vagueness seriously undermines the entire theory[G20].

Moreover, favoring explanatory adequacy over productiveness and accuracy leaves some questions unanswered:

I. How is pertinence obtained?

II. Does the notion of positive cognitive effect, produced by pertinence, rest on an adequacy principle?

III. What makes social information pertinent?

IV. How, technically, is linguistic meaning built up?

V. What’s the form of a meta-representation?

These are only some of the questions that need to be answered in order to obtain a satisfactory theory. By sacrificing deep technical insights of this sort, Ferretti cedes some ground to the Code Model Theory, which, with all its limits, still provides deeper hypotheses on such topics.

4. Does “coding” sound dirty?

Strictly theoretical difficulties aside, it becomes evident that purely computational approaches still carry some unappealing consequences. But we have to ask whether this is an endemic problem of computation theory, coding theory, and information theory, or a problem of the plethora of speculative theories that used them to reach their conclusions. Encoding, for example, is nothing more than a process that, given a source of information, assigns a discrete value to an available state. In its most elementary form, then, we can think of an encoding/decoding process as a meaning-assigning function. Is this process dumb and blind? No dumber than Morse code, which still conveys human language. Surely that is not enough to account for complex phenomena such as language and human thought, but we may think of encoding as the minimal element of a much more complex structure[G21].

Encoding is a process that involves an algorithm and a code[G22]. The algorithm is a set of mechanical rules that execute the function, and the code is the “language” the algorithm “speaks”. The code consists of the terms the algorithm has available to describe the source emitting data, so the complexity of this “description”, or more technically “codeword”, is entirely determined by the code taken as the potential output. The algorithm, on the other hand, carries out the task of integration: more computationally efficient and effective algorithms can integrate more, and more complex, information, giving rise to new and more powerful kinds of encoding processes.
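The distinction between algorithm and code can be illustrated with Morse code. In the sketch below, the same two functions (the “algorithm”) work with whatever codebook is passed to them (the “code”). Only a handful of letters of International Morse Code are included, and the function names are my own.

```python
from typing import Dict

# A small subset of International Morse Code (the "code").
MORSE: Dict[str, str] = {
    "A": ".-", "E": ".", "N": "-.", "O": "---", "S": "...", "T": "-",
}


def encode(text: str, code: Dict[str, str]) -> str:
    """The 'algorithm': look up each letter and separate codewords with spaces."""
    return " ".join(code[ch] for ch in text.upper())


def decode(signal: str, code: Dict[str, str]) -> str:
    """Inverse algorithm: invert the code table and map codewords back to letters."""
    inverse = {word: ch for ch, word in code.items()}
    return "".join(inverse[word] for word in signal.split(" "))


print(encode("sos", MORSE))       # ... --- ...
print(decode("- . -. -", MORSE))  # TENT
```

Swapping in a larger codebook extends what can be described without touching the algorithm. Conversely, without the space separators the signal would be ambiguous (“.-” could be A, or E followed by T), which is one concrete sense in which the code, and not only the algorithm, fixes what can be reliably communicated.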

The way information is encoded defines the richness of its content and its potential development, while the actual encoding determines its final outcomes. What I propose, then, is that human faculties are the product not of competition between different kinds of information or structures, but of a complex and integrative way of encoding information. Information is first encoded and then computed in a hierarchical structure by means of a “code” complex enough[G23] to allow us to experience the richness of life. What we need to find out is whether the brain is a rather special computational machine, or whether it has instead found some incredibly efficient algorithms[G24].

I think this type of account can be treated in evolutionary terms and can find some grounding on a neurological basis[22], but it can certainly find in computational linguistics some insights into what can be done computationally. Examples include research lines that try to reproduce with a computer the elements that make up human irony (Hye-Jin and Jong C. Park 2007) and the placement of human language within the Chomsky Hierarchy[23] (Chomsky 1956).
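The levels of the Chomsky Hierarchy are usually illustrated with textbook formal languages. The sketch below gives direct recognizers (not grammars) for three of them: a^n b^n, which is context-free but not regular; a^n b^n c^n, which is not context-free; and the copy language ww, whose crossing dependencies are a standard model of the cross-serial constructions behind the view in note 23 that natural language is mildly context-sensitive. Both of the last two languages can be generated by mildly context-sensitive formalisms such as tree-adjoining grammar. The function names are my own.

```python
import re


def is_anbn(s: str) -> bool:
    """a^n b^n (n >= 1): context-free, not regular.
    The regex only checks the shape a+b+; the length comparison does the counting."""
    m = re.fullmatch(r"(a+)(b+)", s)
    return bool(m) and len(m.group(1)) == len(m.group(2))


def is_anbncn(s: str) -> bool:
    """a^n b^n c^n (n >= 1): not context-free."""
    m = re.fullmatch(r"(a+)(b+)(c+)", s)
    return bool(m) and len(m.group(1)) == len(m.group(2)) == len(m.group(3))


def is_copy(s: str) -> bool:
    """ww over {a, b}: the second half repeats the first, giving crossing dependencies."""
    half, rest = divmod(len(s), 2)
    return (rest == 0 and half > 0 and set(s) <= {"a", "b"}
            and s[:half] == s[half:])


for word in ["aabb", "aabbcc", "abab", "aabbc"]:
    print(word, is_anbn(word), is_anbncn(word), is_copy(word))
```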

What is certain is that if we want to avoid redundancy and excessive speculation, we need to search for simple but powerful principles that can potentially explain complex phenomena. In doing so, we need only follow Dennett’s advice and not fear Darwin’s dangerous idea[G25].

References

Adriaans P. & van Benthem J., “Philosophy of Information”, North Holland, 2008;

Bear M., Connors B., Paradiso M., “Neuroscience”, Lippincott Williams & Wilkins, 2007;

Casalegno P., “Filosofia del Linguaggio”, Carocci Editore, 2004;

Chomsky N., “Nuovi orizzonti nello studio del Linguaggio e della Mente”, Il Saggiatore, Milano 2005;

Dennett D., “L’idea pericolosa di Darwin”;

Ferretti F., “Perché non siamo speciali”, Editori Laterza, Milano 2009;

Floridi L., “Information”, Oxford University Press, 2010;

Guasti M. T., “Language Acquisition”, The MIT Press, 2004;

Jackendoff R., “Language, Consciousness, Culture”, The MIT Press, 2007;

Jurafsky & Martin, “Speech and Language Processing”, Pearson, 2009;

Metzinger T., “Il Tunnel dell’io”, Raffaello Cortina Editore, 2010;

Patterson & Hennessy, “Struttura e progetto dei calcolatori”, Zanichelli, 2010;

Pievani T., “La teoria dell’evoluzione”, Il Mulino, 2006;

Pievani T., “L’evoluzione della mente”, Sperling, 2008;

Tononi G., “Galileo e il fotodiodo”, Editori Laterza, Milano 2003;

Von Neumann J., “The Computer and the Brain”, Yale University Press, 2000.

[1] Here I am obviously making a very long story short. Chomsky’s review has drawn fire from a number of critics (see, for instance, MacCorquodale 1970), but what really matters for this paper, methodological issues aside, is the historical relevance of Chomsky’s ideas.

[2] I am well aware that this is a very crude assumption. The purposes of the two theories are quite different, and so are the specific computational approaches they adopt, but I think that, in a broad sense, it is fair to ground these approaches in a comprehensive notion of computation that includes the generative portion of Chomskian linguistic theory.

[3] Following Ferretti, I take this alliance as a fact, even though each side often criticizes the other in one way or another.

[4] Language here enters a theoretical framework in which a speaker is treated as a source of information and a receiver, the listener, is linked to it by a communication channel. The speaker sends through the channel a linguistic expression encoded in bits, which the listener decodes by means of the same coding algorithm.

[5] Grammatical competence is fully mediated by syntax, and hence fully defined by the predetermined structure of syntax, which does not follow from experience.

[6] A process of mixing between different languages that initially leads to a rudimentary contact language (a pidgin) and then produces a full-fledged language (a creole) showing some of the characteristics of the parent languages. This process is often very fast, faster than usually expected, and shows great and sudden improvements from one generation of speakers to the next.

[7] Such as lexicon acquisition (Naigles 1990) or the setting of linguistic parameters at an early age (Werker & Tees 1984).

[8] Here I assume that evolutionary insights into the phonological portion of the linguistic module are more robust and less problematic than those concerning the other two components (syntax and semantics), so I concentrate on the latter two, leaving the relations between language and the evolution and maturation of the vocal apparatus mostly untouched. Interested readers may refer to Lieberman, Crelin and Klatt (1972), Lieberman (1984), Locke (1983), and Studdert-Kennedy (1991).

[9] If the cognitive triangle is directed toward the two speakers, we speak of social intelligence; on the other hand, an adaptive relation that favors the speaker-world relation involves an ecological type of intelligence.

[10] Ferretti (2007), p. 128, “La pertinenza è un effetto dei processi di massimizzazione dell’efficacia del trattamento dell’informazione”.

[11]Ibidem, “effetto cognitivo positivo”.

[12]I think that explaining how life has come to be and how an enormous set of alternatives has produced simple and complex forms of life is the main task of an evolutionary biological theory. In the problem at hand this translates into the task of explaining how sets of primitives come to be, how general principles of life evolve from scratch.

[13] The generation of sets of primitives.

[14] To be precise, Chomsky refers to a “linguistic acquisition module” (Chomsky 1975).

[15] A module is strictly defined by: domain specificity, informational encapsulation, obligatory firing, speed, shallow outputs, limited accessibility, characteristic ontogeny, fixed neural architecture (Fodor 1983).

[16] Pitt, David, “Mental Representation”, The Stanford Encyclopedia of Philosophy (Fall 2008 Edition), Edward N. Zalta (ed.), URL = <http://plato.stanford.edu/archives/fall2008/entries/mental-representation/>.

[17]Ibidem.

[18] This is the kind of semantics the classical definition of representation uses. The meaning of an atomic linguistic expression is its truth value, and the meaning of a complex proposition is a function of the truth values of the atomic expressions that compose it. Taking the LOT stance, the meaning of a LOT string is a function of the truth values of the symbols that compose it. The truth value of a symbolic representation, in turn, is its correspondence to a fact of the world.

[19]See note 9.

[20] See Leslie’s “pretend play” (Leslie 1987), Baron-Cohen (1985) and Wimmer & Perner (1983) for some evidence on this argument.

[21] Here meaning is intended as a set of interpretation rules that accounts for the acquisition and understanding of single terms.

[22] See Metzinger (2010) for some evidence of sensorimotor representations in Broca’s area, and of a correlation between some kinds of aphasia (Broca’s and transcortical motor aphasia) and sensorimotor coding.

[23] Natural language is now generally characterized as mildly context-sensitive.

[G1] It is not such a “simple” idea!

[G2] Which I have always considered half rubbish… but maybe it isn’t.

[G3] This assumption seems hard to defend to me… because there are cases in which language and thought do not overlap. If by “thought” we mean the set of “facts of the mind”, then not every fact of the mind has a syntactic/semantic structure able to render it linguistically. Moreover, one must ask here whether the isomorphism lies in a first-person vocabulary (what we can say about ourselves and our mental states) or in a third-person one, because then things change radically. We can translate the whole mental mechanism into a series of words (third person), but this operation is not possible in the first person. Trivially, we can talk about subintentional cognitive processes in the third person but not in the first. So there is an evident divergence between the first-person vocabulary (a possible language of thought) and the third-person one. Superimposing the two languages is an illegitimate move, because then there must be translation rules between them, and this is highly debatable, since it is not easy to translate the first-person vocabulary of the Self into the third person. For example: “I see myself in the mirror” implies the visual recognition of ME as a Person and not only as a perceptual stimulus. From this point, it seems to me that there is a problem of ambiguity in the definition of “isomorphism between two languages”. Another point: if the two languages are isomorphic, then the translation rules between them allow a perfect paraphrase of every proposition of language 1 into language 2. But what are these translation rules? What are they part of? Are they a third language 3 that transforms the first into the second? Hmm…

[G4] On what basis is it said to be “a single module” for three different operations? Wouldn’t it make more sense to speak of three modules integrated by a further one that combines them (three Turing machines plus one that uses the outputs of the three as its inputs)?

[G5] Just a curiosity: why must we keep two levels, “thought” and language, distinct, when the level of language alone suffices? Wouldn’t it be more economical, from a metaphysical point of view, to postulate the existence of language alone? With this definition, the level of “thought” could very well be a linguistic projection (a metalanguage built from language itself). Keep in mind that this is possible because our natural language allows semantic paradoxes, that is, it is not closed, so it allows us to build N metalanguages. This avoids the embarrassment of postulating translation rules that mediate between the two levels and whose nature is not at all clear… Moreover, the existence of such translation rules is indispensable, because without them there would be no direct link between language and thought.

[G6] This old story… why would language be incompatible with evolutionism? Apart from the fact that many people manage to prove anything at all with evolutionism (which smells of theoretical contradiction, since from a contradiction anything follows), I do not see why the acquisition of an expressive system like ours should not be compatible with an evolutionary process spanning several million years.

[G7]Right.

[G8]It is the social environment, more than the natural one… Indeed, had humans been solitary animals (like pumas), they would have had no reason to develop efficient communication systems. Moreover, what probably formed in the human mind is a series of "cascades of interconnected causal chains" that generated virtuous informational circles, which in turn triggered connections between various areas of the mind. An example: our linguistic knowledge noticeably alters perception (the idea of the theory-ladenness of perception). Imagine how important this banal fact is in a world where names can be given to distinguish poisonous objects from good ones! Objects once seen as identical come to be seen as distinct at the perceptual level!

[G9]Here I do not agree at all. Reason (serial reasoning over whatever the inputs may be) is very costly in terms of computational energy. In fact, the main cause of people's mental laziness is precisely that they minimize energy and choose mental paths shorter than serial reasoning. If this is true, then it is false that serial reasoning maximizes relevance. In this sense, cognitive pragmatics shows unequivocally that people say little and imply much precisely in order to minimize waste. But in many cases this means that communicative optimality is sacrificed to mental laziness.

[G10]I discussed this topic in an article available on this site, arguing that language arose first of all as a means of communicating intentions and only later as a means of describing the world. In this respect, I could almost embrace the "descriptive fallacy" proposed by good old Austin.

[G11]Dennett, in fact, builds on the idea that everything mental, or everything encoded by information-processing practices, must have an evident evolutionary rationale, that is, it must serve an advantageous purpose. Granting that this is true, we must infer causes from effects, which according to Spinoza is the origin of all prejudices. Indeed, it is doubtful that language arose for that purpose since, as is well known, in most conversations people do not talk in order to communicate but talk for the sake of talking, as the reported conversation and many others make evident (from my "La pragmatica: tra verifiche empiriche e discussioni normative" [Pragmatics: between empirical tests and normative discussions]).

Let me point out that the various Ferrettis, with these "reasonable reconstructions", fall under the scythe of the anti-evolutionist argument: they claim that language was created with the function of… but this is exactly what evolution does not do! Evolution produces anomalies that have no function (non-finalistic mechanism), and only afterwards are these put to the test of natural selection, which reveals whether the anomaly is fruitful or not, and not before. Their fault, then, is to use a language that looks mechanistic but is in reality finalistic (Lamarckian). Consequently, if language is compatible with evolutionism, it was not "created" for any particular purpose; rather, it accumulated many functions over time, the chief advantage of which was to build a bridge between two minds.

[G12]Hmm… If two subjects S(I) and S(II) process the same information (identical inputs), why can they produce very different outputs, even when the beliefs previously stored in memory are the same? One may well assume that this possibility is real. If relevance maximization is taken as the basis of intelligence, then one of two things holds: either one of them lacks the principle, or both possess it; and if both possess it, they should produce the same output from the same inputs. That strikes me as only a rare possibility. Besides, if it were the norm, we would still be stuck with arrows and spears, and we would not need much time to work out deep answers to real and theoretical problems, since we would always agree.

[G13]But Dennett's explanation is much more refined: the existence of various cognitive agencies rules out a priori the possibility of a single processing route… and, essentially, it also rules out treating intelligent processing in terms of relevance in this sense…

[G14]If there is a hierarchy, then there is a primacy, either causal or inferential… in which case it is evidently questionable to posit the isomorphism at the metaphysical and inferential level! And so, once again, one must ask whether such a conception is plausible.

[G15]At a high level, yes.

[G16]Highly questionable. I have the impression that Ferretti falls into this error: seeing what he himself would like to see.

[G17]I reiterate that one can say only true things while saying nothing at all. Moreover, the truth conditions of a sentence are not the conditions of its knowability. On the other hand, a language without reference may be a perfect language, but it is also an empty one. A truth value says nothing about truth, about what it consists in!

[G18]Who, I imagine, does not bother to show rigorously what this amounts to… As always happens when people talk about "sociality", an obscure word that everyone uses in the hope that nobody will ask them to specify its conditions and definitions.

[G19]Brilliant! If the mind consists of a set of intelligent and relevant modules, one is almost led to think that even idiots are intelligent! Or that one can be an idiot while being made up only of intelligent parts! But this looks like a paradox, unless one holds that the combined output of the individual intelligent agencies yields an idiotic result.

[G20]No doubt.

[G21]That is, one of the necessary conditions… OK.

[G22]There could be many algorithms… Worth keeping in mind.

[G23]That's the point! But how "complex enough" must it be, what is the nature of this "complex", and on which "simples" is it grounded?

[G24]Except when you fall into depression or fail to understand something, and then efficiency turns into deficiency… From a naturalistic point of view, the evaluative/normative term "efficiency" should be avoided… unless the author intends to provide an exhaustive normative theory of it.

[G25]OK.
